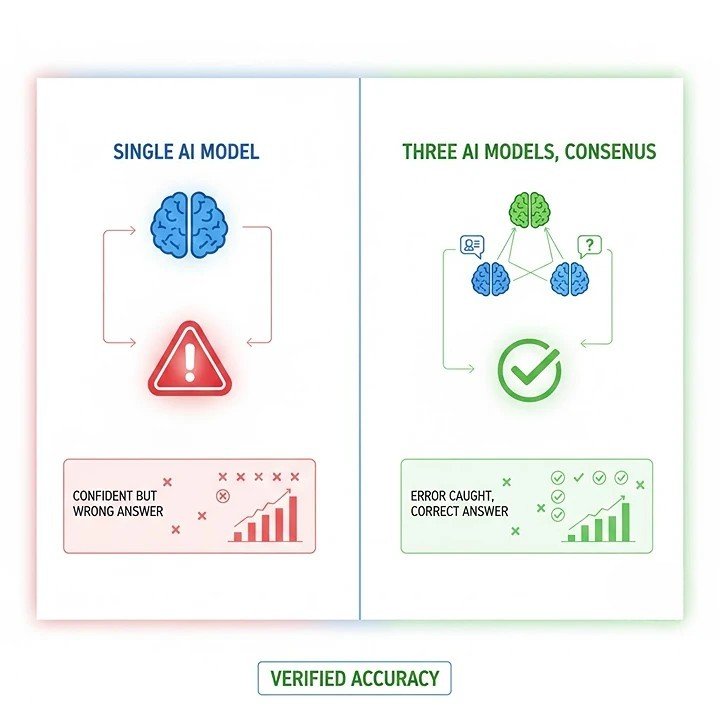

- Why running three different AI models together beats using just one

- How Perplexity’s new system catches mistakes before you see them

- The fascinating way GPT-5.2, Claude, and Gemini argue until they agree

- Why this approach matters more than any single breakthrough model

- Real examples showing how cross-validation stops AI from making stuff up

- What this means for businesses that depend on accurate AI information

Perplexity launched the Model Council on February 7, 2026. This system runs GPT-5.2, Claude, and Gemini in parallel. Each model generates its own answer independently. Then the system compares all three responses side by side. Users can clearly see where the models agree and where they disagree. According to Perplexity, this reduces the hallucination errors that plague single-model systems. Think of it like getting three expert opinions before making an important decision.

Why One AI Brain Isn’t Enough Anymore

Here’s something that bothers me about using ChatGPT or Claude alone. Sometimes they sound incredibly confident while being completely wrong. You ask a simple factual question, and boom—you get a detailed answer that seems perfect. Except it’s not.

I’ve been there. We’ve all been there, right? You trust the AI, use the information, and later discover it was nonsense. That’s the hallucination problem everyone talks about, but nobody really solves.

Well, Perplexity just did something genuinely clever about this. Instead of making one AI smarter, they made multiple AIs work together. It’s like having three really smart friends, each good at different things, double-check your homework before you turn it in.

Honestly? This feels like the first real solution I’ve seen.

How Model Council Actually Works

So here’s what Perplexity built. When you ask a question, three separate AI models tackle it simultaneously. We’re talking about the big names here—GPT-5.2 from OpenAI, Claude from Anthropic, and Gemini from Google.

Each one generates its own complete answer independently. They don’t see what the others are saying yet. This matters because you want genuine diversity of thought, not groupthink.
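To make the "independent answers" idea concrete, here is a minimal sketch of a parallel fan-out. It is not Perplexity's code: `ask_model` is a hypothetical stand-in for a real API call, and the model names are just labels. The point it shows is that each call receives only the question, so no model can see another's answer.

```python
import asyncio


async def ask_model(name: str, question: str) -> str:
    # Hypothetical stand-in for a real model API call (assumption, not a real client).
    await asyncio.sleep(0)  # placeholder for network latency
    return f"{name} answer to: {question}"


async def ask_council(question: str, models: list[str]) -> dict[str, str]:
    # Every call gets only the question, so the answers are produced independently.
    answers = await asyncio.gather(*(ask_model(m, question) for m in models))
    return dict(zip(models, answers))


if __name__ == "__main__":
    council = asyncio.run(ask_council("Who wrote Dune?", ["gpt", "claude", "gemini"]))
    for model, answer in council.items():
        print(model, "->", answer)
```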

Then comes the interesting part. The system lays out all three answers side by side. It highlights where they agree strongly. It also shows where they disagree and why. Users can see the full picture before deciding what to trust.

Think about it like this. If all three models say the same thing, you can feel pretty confident. But if one model contradicts the others, you know to be cautious. And when they all disagree? That tells you the question itself might be tricky or poorly defined.
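The three cases above (everyone agrees, one model dissents, nobody agrees) can be sketched as a small classifier. This is an illustration under a big assumption: it compares answers by crude text normalization, whereas a real system would need some form of semantic comparison. The function names are hypothetical.

```python
from collections import Counter


def normalize(answer: str) -> str:
    # Crude normalization: lowercase, collapse whitespace, drop trailing punctuation.
    return " ".join(answer.lower().split()).rstrip(".!?")


def classify_agreement(answers: dict[str, str]) -> tuple[str, list[str]]:
    """Return an agreement label and the models whose answers need a second look."""
    counts = Counter(normalize(a) for a in answers.values())
    top_answer, top_count = counts.most_common(1)[0]
    if top_count == len(answers):
        return "unanimous", []
    if top_count > len(answers) / 2:
        dissenters = [m for m, a in answers.items() if normalize(a) != top_answer]
        return "majority", dissenters
    # No answer has a majority: treat every model's answer as unconfirmed.
    return "split", list(answers)
```

For example, `classify_agreement({"gpt": "Paris", "claude": "Lyon", "gemini": "paris"})` returns `("majority", ["claude"])`, which is the "be cautious" case.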

This is way smarter than just picking the “best” model and hoping it’s right.

The AI United Nations Comparison

Someone described Model Council as creating an AI United Nations. That comparison actually works pretty well. You’ve got representatives from different camps sitting around a conference table, each bringing their own perspective and expertise.

GPT-5.2 might excel at creative reasoning. Claude could be better at technical accuracy. Gemini might understand context more deeply. When they all weigh in, you get a more complete picture than any single model provides.

But here’s what I find fascinating. They’re not just voting on answers. The system shows you their reasoning processes, too. So you can understand why each model reached its conclusion. That transparency matters enormously for building trust.

Nobody wants a black box telling them what to believe. We want to understand the thinking behind the answer.

Real-World Impact on Hallucination Errors

Perplexity claims this approach significantly reduces hallucination errors. Hallucination is the term for an AI confidently making stuff up. It happens more often than people realize, and it’s been a massive problem.

Let me give you an example of how cross-validation helps. Say you ask about a recent scientific study. GPT might reference research that sounds plausible but doesn’t actually exist. Claude might catch that the dates don’t match up. Gemini could verify the actual published papers.

When you see all three perspectives, the fake study gets exposed immediately. You’re not misled, because the disagreement flags the error before you act on it.

This matters tremendously for professionals relying on AI. Lawyers need accurate case citations. Doctors need correct medical information. Researchers need real data, not hallucinated references.

Single-model systems simply can’t provide that level of reliability yet. But committees of models? That’s showing genuine promise.

Why This Beats the Bigger-Is-Better Approach

The AI industry has been obsessed with building bigger models: more parameters, more training data, more computing power. And sure, that’s pushed capabilities forward in important ways.

But Perplexity took a completely different approach here. Instead of making a single model more powerful, they intelligently combined three existing models. The innovation isn’t in the models themselves—it’s in how they work together.

I think this matters because it’s actually achievable right now. You don’t need to wait for GPT-6 or Claude 5. You can implement multi-model systems today using current technology.

Plus, there’s something inherently more trustworthy about consensus. When multiple independent systems agree on something, that’s meaningful validation. When they disagree, that’s meaningful uncertainty you need to know about.

The Technical Implementation Challenge

Now, running three models simultaneously isn’t trivial from an engineering perspective. You’re essentially tripling your computational costs per query. That’s expensive.

Perplexity had to build infrastructure that handles multiple model APIs efficiently. They needed to manage the timing so all three responses arrive at reasonable speeds. And they had to create an interface that presents three answers without overwhelming users.
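One way to handle the timing problem is to give every model the same deadline and carry on with whatever arrives in time. The sketch below is an assumption about how such a system could work, not a description of Perplexity's infrastructure. `slow_model` and its delays are made up to simulate fast and slow responders.

```python
import asyncio
from typing import Optional


async def slow_model(name: str, delay: float) -> str:
    # Hypothetical stand-in for a model API call that takes `delay` seconds.
    await asyncio.sleep(delay)
    return f"{name} answer"


async def ask_with_deadline(delays: dict[str, float], timeout: float) -> dict[str, Optional[str]]:
    async def guarded(name: str, delay: float) -> Optional[str]:
        try:
            return await asyncio.wait_for(slow_model(name, delay), timeout)
        except asyncio.TimeoutError:
            return None  # this model missed the deadline; the others are unaffected

    # All calls run concurrently, so total wait is bounded by the timeout.
    results = await asyncio.gather(*(guarded(n, d) for n, d in delays.items()))
    return dict(zip(delays, results))
```

With this shape, a slow model shows up as a missing answer rather than holding up the whole response, and the interface can decide how to present a two-out-of-three result.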

That’s genuinely hard to do well. But they seem to have pulled it off based on early user reports. The system stays responsive while providing that additional validation layer.

From a business model standpoint, this probably means higher subscription costs for users. You can’t run three models for the price of one. But if the improved accuracy justifies the premium, people will pay it.

Especially enterprise customers who need reliability more than low prices.

What Enterprise Customers Gain Here

Let’s talk about why businesses should care about this. Companies are increasingly dependent on AI for research, analysis, and decision support. But trusting a single model creates risk.

Imagine you’re a financial analyst using AI to research investment opportunities. If the AI hallucinates data about a company’s performance, that could lead to seriously bad decisions. Money gets lost. Careers get damaged.

With Model Council’s approach, you get built-in fact-checking. If GPT says one thing and Claude says something contradictory, you know to dig deeper. You’re not blindly trusting a single source.

This reduces the risk profile substantially for enterprise AI deployment. And that matters because many companies have held back specifically due to reliability concerns.

Now they have a path forward that actually addresses their worries.

The Future: More Committee-Style AI Systems

I predict we’ll see a lot more systems adopting this multi-model approach. It just makes too much sense not to copy.

Microsoft might combine its own models with OpenAI’s for validation. Google could run Gemini alongside other internal models. Amazon will probably implement something similar with Bedrock.

The era of single-model AI responses might actually be ending. We’re moving toward systems that automatically cross-reference and validate before presenting information.

That’s a fundamentally healthier way to build AI products. It acknowledges that no single model is perfect. It builds in checks and balances from the start.

Potential Limitations to Consider

Of course, this approach isn’t perfect either. What happens when all three models are wrong about the same thing? That can happen if they’re all trained on similar flawed data.
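A bit of arithmetic shows why shared training data matters so much. This is a toy model with made-up numbers, not a measurement: assume each model is wrong with probability `p`, and that with probability `shared` all three share the same blind spot and fail together, while otherwise they fail independently.

```python
def majority_wrong_probability(p: float) -> float:
    # P(at least two of three models are wrong), assuming fully independent errors.
    return 3 * p**2 * (1 - p) + p**3


def all_wrong_probability(p: float, shared: float = 0.0) -> float:
    # Toy mixture: with probability `shared` the models fail together (probability p),
    # otherwise they fail independently (probability p cubed).
    return shared * p + (1 - shared) * p**3


if __name__ == "__main__":
    p = 0.1  # illustrative error rate, not a real figure
    print(majority_wrong_probability(p))
    print(all_wrong_probability(p, shared=0.0))
    print(all_wrong_probability(p, shared=1.0))
```

Under full independence, three wrong answers at once is a one-in-a-thousand event at a 10% error rate. If the models share every blind spot, it is back to one in ten, and the committee adds nothing.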

There’s also the question of update cycles. If GPT-5.2 updates but Claude and Gemini don’t, you might get inconsistent knowledge. Managing version control across three separate models gets complicated fast.

And then there’s the computational cost issue I mentioned earlier. Not every use case justifies running three models. Sometimes you just need a quick answer, and perfect accuracy doesn’t matter that much.

So Model Council probably makes the most sense for high-stakes queries where correctness really matters. For casual chatting or brainstorming? A single model might be fine.
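Here is a rough sketch of that trade-off: send only high-stakes queries to the council and estimate what that does to cost. Everything in it is an assumption for illustration, including the keyword list, the function names, and the premise that every model call costs the same.

```python
# Hypothetical keyword list; a real router would need a far better signal than this.
HIGH_STAKES_TERMS = {"legal", "medical", "financial", "citation", "diagnosis"}


def choose_mode(query: str) -> str:
    words = {w.strip(".,?!").lower() for w in query.split()}
    return "council" if words & HIGH_STAKES_TERMS else "single"


def relative_cost(council_fraction: float, models_in_council: int = 3) -> float:
    # Cost relative to answering every query with one model,
    # assuming each model call costs the same.
    return (1 - council_fraction) + council_fraction * models_in_council
```

If a quarter of queries go to the council, `relative_cost(0.25)` comes out to 1.5, so the bill grows by half rather than tripling.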

Frequently Asked Questions

Why use multiple AI models instead of one?

Single models hallucinate information regularly while sounding confident. Running multiple models together catches these errors before users see them. When GPT-5.2, Claude, and Gemini all agree on something, you can trust it more. When they disagree, you know to be careful.

This approach provides built-in fact-checking automatically. You get multiple expert opinions on every question. That’s far more reliable than trusting one source alone.

Which models does Model Council use?

The system currently uses three frontier models. GPT-5.2 comes from OpenAI’s latest release. Claude represents Anthropic’s top offering. Gemini is Google’s flagship model.

Each excels at different things naturally. GPT handles creative tasks well. Claude shows strong technical accuracy. Gemini understands context deeply.

Together, they cover each other’s weaknesses effectively. This diversity creates more complete answers overall.

Does Model Council cost more than a single model?

Running three models simultaneously definitely costs more than running one. Computational expenses increase significantly with this approach.

However, Perplexity seems to think the value justifies the premium. Enterprise customers typically need reliability more than low prices. They’ll pay more for accuracy.

For casual users, single-model access will probably remain available. Model Council might be a premium feature instead.

Can all three models be wrong at the same time?

Yes, this remains possible in certain situations. If all three models were trained on similar flawed data, they might all repeat the same errors.

Cross-validation catches individual model mistakes well. But it won’t catch systematic problems affecting the entire training set.

That’s why human judgment still matters fundamentally. AI committees help, but don’t replace critical thinking completely.

Will other companies adopt this approach?

Almost certainly yes, I’d bet on it. The concept makes too much sense to ignore.

Microsoft could combine its models with OpenAI’s. Google might run multiple Gemini versions together. Amazon will probably implement something similar.

We’re likely seeing the start of a new standard. Single-model responses might become outdated pretty quickly now.